Introduction

Understanding AI Maturity: A Framework for Business Leaders

By Hindol Datta / July 12, 2025

This article provides a five-level maturity framework for assessing readiness across data, culture, leadership, and AI governance.

Why AI Readiness Is Not a Tech Question but a Leadership One

Over the past three decades, I have led transformations in finance, analytics, and operations across industries including SaaS, logistics, healthcare, and professional services. I have seen cloud adoption stumble because of culture, not code. I have seen business intelligence tools gather dust because leaders did not ask the right questions. Now, as companies rush to apply artificial intelligence across their businesses, I see a familiar pattern repeating itself: technology outpaces understanding, and strategy lags behind capability. The challenge is no longer just implementation; it is aligning AI with the business so that it delivers real value.

The most critical question today is not whether a company is using AI. It is whether the company is ready to mature its use of AI across strategy, operations, data, governance, and culture without creating chaos, compliance risk, or organizational drag. AI readiness depends on how well your systems integrate artificial intelligence, how clean your data pipelines are, the level of trust in leadership, and whether your governance architecture supports accountability.

As AI begins to touch every function, from sales forecasting and contract review to product innovation and investor communications, boards and CFOs must treat AI maturity and AI capability as strategic assets. In an environment where capital is expensive and competitive advantage depends on speed, AI readiness becomes a proxy for leadership quality and long-term performance.

Why Boards and CFOs Must Own the AI Readiness Question

CFOs and boards are stewards of capital, risk, and strategic priority, and artificial intelligence cuts across all three. Poorly deployed AI introduces regulatory exposure, data privacy risks, biased insights, and operational fragility. Done well, AI maturity yields leverage, speed, and better decision-making, and it builds sustainable competitive advantage through more accurate forecasts, improved operations, and trusted models.

AI maturity is not a technical project. It is an enterprise condition. It encompasses data architecture, decision velocity, the leadership trust model, governance frameworks, ethics and compliance, systems theory in design, and a cultural willingness to adapt. It deserves structured board-level oversight and regular maturity assessment.

To that end, I have developed a five-level AI maturity framework that founders, CFOs, and board members can use to evaluate their readiness in AI governance, data maturity, leadership alignment, culture, and operational systems.

Level 1: Experimental – Curiosity Without Control

Most early-stage companies begin here. A few teams have started using ChatGPT or a code assistant. Marketing may be using generative AI to create content. Sales might be summarizing call notes. But there is no enterprise AI strategy, no oversight, and no shared vocabulary.

Symptoms:

- Ad hoc usage of GenAI or large language model tools.

- No documentation of how AI outputs are used or governed.

- Data privacy risks are unmanaged.

- No designated AI governance lead or point person.

Risks:

- Shadow AI usage leading to data leaks or brand inconsistency.

- Legal or compliance exposures.

- Inconsistent messaging, internal or external.

Board questions to ask:

- Who is using AI tools now and in what ways?

- Are any customer-facing materials generated by AI without review?

- Have legal or security experts assessed those tools?

Level 2: Functional – Early Wins, Siloed Initiatives

Departments begin to operationalize AI in specific workflows. Finance may use AI for variance analysis. Legal might try contract summarization. But initiatives are isolated, and there is no enterprise-level alignment on governance, ethics, or auditability.

Symptoms:

- Department-level AI experiments.

- Tools embedded in SaaS products but not overseen by IT or compliance.

- Early productivity gains, but results are inconsistent.

Risks:

- Duplication of effort across teams.

- Conflicting metric definitions.

- Absence of escalation paths when AI outputs are wrong or misleading.

Board questions to ask:

- Which teams are operationalizing AI? What value are they creating?

- Are we measuring time saved, cost reductions, or risk mitigation?

- Do we have policies for AI usage exceptions and quality control?

Level 3: Operational – Strategy With Guardrails

This level is where companies become truly AI-aware. There is a defined governance structure, often led by the CFO, CTO, or COO. Use cases are prioritized based on data access, risk, and expected value. Decision metrics are tracked, and human-in-the-loop policies govern critical outputs. AI becomes a lever, not just a novelty.

Symptoms:

- Cross-functional AI governance committee.

- Standardized evaluation criteria for new AI tools.

- Human-in-the-loop review for high-risk outputs.

- Alignment across data operations, legal, and systems teams.

Capabilities:

- AI-generated forecasts reviewed by finance.

- Generative AI summaries for investor relations approved by legal.

- AI-driven product experiments with measurement telemetry.

- Data maturity built on clean infrastructure and pipelines.

Board questions to ask:

- What are our top AI-enabled workflows? What is their ROI?

- Who owns AI governance, and what authority and accountability do they hold?

- Are we logging override events, error rates, or model retraining?
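The last question assumes a log exists to query. As a minimal sketch, assuming each AI-assisted decision is recorded with the AI's recommendation, the decision a human finally approved, and a flag for outputs later found to be wrong, the override and error rates a governance lead might report could be computed as below. The `DecisionRecord` schema, the `summarize` helper, and the sample entries are hypothetical illustrations, not a prescribed standard.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class DecisionRecord:
    """One AI-assisted decision (hypothetical schema for illustration)."""
    workflow: str           # e.g. "revenue_forecast"
    ai_recommendation: str  # what the AI proposed
    final_decision: str     # what the human reviewer actually approved
    error: bool = False     # True if the output was later found to be wrong

    @property
    def overridden(self) -> bool:
        # Simple rule: any difference between proposal and approval counts as an override.
        return self.final_decision != self.ai_recommendation


def summarize(records):
    """Return per-workflow decision counts plus override and error rates."""
    totals = defaultdict(lambda: {"n": 0, "overrides": 0, "errors": 0})
    for r in records:
        t = totals[r.workflow]
        t["n"] += 1
        t["overrides"] += int(r.overridden)
        t["errors"] += int(r.error)
    return {
        wf: {
            "decisions": t["n"],
            "override_rate": t["overrides"] / t["n"],
            "error_rate": t["errors"] / t["n"],
        }
        for wf, t in totals.items()
    }


if __name__ == "__main__":
    # Hypothetical sample entries, not real data.
    log = [
        DecisionRecord("revenue_forecast", "raise Q3 forecast 4%", "raise Q3 forecast 4%"),
        DecisionRecord("revenue_forecast", "raise Q3 forecast 6%", "raise Q3 forecast 3%"),
        DecisionRecord("contract_summary", "flag clause 7", "flag clause 7", error=True),
        DecisionRecord("contract_summary", "no issues", "no issues"),
    ]
    for workflow, stats in summarize(log).items():
        print(workflow, stats)
```

Treating any difference between recommendation and final decision as an override is a deliberately simple rule; teams may want to distinguish minor edits from outright rejections before reporting the rate to the board.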

Level 4: Strategic – Embedded, Trusted, Auditable

AI is part of the operating fabric. Functions across the organization include embedded AI agents with clearly defined roles and confidence thresholds. Outputs are explainable, documented, and continuously monitored. Business decisions are shaped by AI-generated insight, not just supplemented by it.

Symptoms:

- Scenario planning includes AI-driven simulations.

- Decision logs contain AI-agent recommendations and human overrides.

- Board materials include AI performance metrics.

- Systems theory informs the design of feedback loops for continuous improvement.

Benefits:

- Faster time to decisions.

- Improved forecast accuracy.

- Lower operational overhead in routine tasks.

- Stronger compliance and risk management.

Board questions to ask:

- How often do AI agents influence strategic decisions?

- Have we benchmarked AI ROI across all departments?

- What transparency do we provide to investors about how AI is used?

Level 5: Autonomous – Self-Learning, Systemic, Audited

Very few companies have reached this level, but the most competitive ones will. AI agents orchestrate complex decisions across systems. Feedback loops are automated. Strategic questions are framed by models, debated by humans, and implemented through adaptive playbooks. AI capability becomes part of the organization's identity.

Characteristics:

- Multi-agent architectures coordinate across finance, operations, sales, and product.

- Every AI decision is logged, explained, and improved through feedback.

- Board-level visibility into failure modes, performance metrics, and governance health.

- Regulatory readiness built in, not reactive.

Implications:

- Strategic advantage compounds.

- Human roles shift toward judgment, design, systems thinking, and exception handling.

- Capital allocation becomes more confident and more directed.

- Leadership capacity improves because AI frees bandwidth for insight rather than busywork.

Board questions to ask:

- What is our AI decision hierarchy? Where are humans required, and where are they optional?

- Do we maintain external audit trails for AI usage in regulated decisions?

- Is AI improving leadership capacity or adding complexity?

Mapping the Maturity Curve to Capital Strategy

AI maturity is not just about cost savings or technical efficiency. It is about bringing clarity to the hypotheses behind capital allocation. Companies at higher maturity test more hypotheses, make faster decisions, iterate more frequently, and allocate funds with greater conviction. They rely less on ad hoc intuition and more on data-driven models informed by systems theory.

As CFO, I incorporate AI maturity into my capital strategy. Teams with strong AI readiness receive greater autonomy, faster funding, and tighter governance. Teams in experimental stages get design support and measured oversight.

Boards should request regular AI maturity reporting, just as they do for financial audits or security reviews. This signals discipline and builds trust among investors and stakeholders.

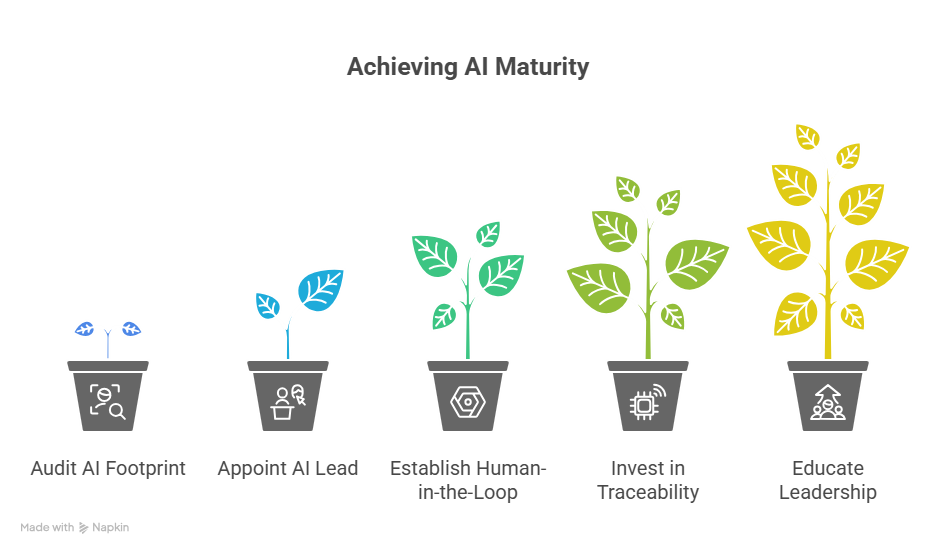

Five Immediate Steps to Move Up the Maturity Curve

1. Audit your AI footprint: survey current use cases, tools, workflows, risks, and gaps.

2. Name an AI governance lead, someone with cross-functional authority, not just in IT.

3. Establish human-in-the-loop rules that define which outputs require human review, and document the override process.

4. Invest in traceability and explainability: capture inputs, logic paths, decisions, and outcomes.

5. Educate the board and leadership on AI maturity metrics. Share cases, invite scrutiny, and foster a culture of transparency.
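The human-in-the-loop and traceability steps can be made concrete with a small routing rule. The sketch below is a hypothetical illustration, assuming each AI output carries a risk tier and a confidence score between 0 and 1; the tiers, the threshold values, and the `requires_human_review` and `route` functions are assumptions chosen for the example, not recommended settings. High-risk outputs always go to a human, and every routing decision returns a trace record of the inputs, the rule applied, and the outcome.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    LOW = "low"        # e.g. internal meeting summaries
    MEDIUM = "medium"  # e.g. variance commentary for management
    HIGH = "high"      # e.g. investor communications, contract terms


# Hypothetical policy: minimum confidence for auto-approval by risk tier.
# None means the tier always requires human review, whatever the confidence.
AUTO_APPROVE_THRESHOLD = {
    Risk.LOW: 0.70,
    Risk.MEDIUM: 0.90,
    Risk.HIGH: None,
}


@dataclass
class AIOutput:
    use_case: str
    risk: Risk
    confidence: float  # assumed model-reported or calibrated score in [0, 1]


def requires_human_review(output: AIOutput) -> bool:
    """Apply the policy table: review if the tier demands it or confidence is too low."""
    threshold = AUTO_APPROVE_THRESHOLD[output.risk]
    if threshold is None:
        return True
    return output.confidence < threshold


def route(output: AIOutput) -> dict:
    """Return a trace record: inputs, the threshold applied, and the routing decision."""
    review = requires_human_review(output)
    return {
        "use_case": output.use_case,
        "risk": output.risk.value,
        "confidence": output.confidence,
        "threshold": AUTO_APPROVE_THRESHOLD[output.risk],
        "decision": "human_review" if review else "auto_approve",
    }


if __name__ == "__main__":
    for o in [
        AIOutput("meeting_summary", Risk.LOW, 0.82),
        AIOutput("variance_commentary", Risk.MEDIUM, 0.85),
        AIOutput("investor_update_draft", Risk.HIGH, 0.99),
    ]:
        print(route(o))
```

With these sample values, the meeting summary would be auto-approved, while the variance commentary (confidence below its 0.90 threshold) and the investor update draft (high risk) would both be routed to human review. Keeping thresholds in a single policy table makes them easy for a governance committee to inspect and change.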

Closing Thought: Readiness Is a Leading Indicator

AI will not wait for organizational comfort. The companies that succeed will not be the fastest adopters. They will be the most prepared structurally, culturally, and operationally.

Being AI-ready does not mean deploying every tool. It means knowing which decisions require speed, which require trust, and where intelligence should flow. AI maturity is not a nice-to-have. It is essential to competitive leadership and resilient design.

Hindol Datta, CPA, CMA, CIA, brings 25+ years of progressive financial leadership across cybersecurity, SaaS, digital marketing, and manufacturing. Currently VP of Finance at BeyondID, he holds advanced certifications in accounting, data analytics (Georgia Tech), and operations management, with experience implementing revenue operations across global teams and managing over $150M in M&A transactions.