
From Forecasts to Hypotheses: Rethinking AI in Decision Making

By Hindol Datta / July 12, 2025

Explores agent explainability and reproducibility, and how Boards should view AI-augmented forecasts not as truth but as testable hypotheses.

Introduction: Embracing Order in Chaos

In my thirty years of finance, operations, and systems leadership, I have lived through moments of chaos. When I was at Accenture advising clients on complex systems integration, I saw projects with dozens of moving parts, unpredictable external shocks, and perpetually tight timelines. At GN ReSound, where I managed operations in medical devices and manufacturing, supply chain variability, margin pressures, and global regulatory shifts created many “chaotic” states. And as an advisor to United Way, I observe social systems where small changes in community input or funding availability can shift outcomes widely. Today, as AI-assisted decision making emerges as a core enabler of resilience, leaders are beginning to see how it can help them navigate complexity, simulate uncertainty, and bring structure to chaos.

Systems theory teaches us that complex adaptive systems often produce emergent order, and that chaos is not a lack of structure but sensitive dependence on initial conditions. The same is true for AI decision making and forecasting: inputs matter, models matter, and mindset matters. If a Board treats the forecast as reality without recognizing model assumptions, input quality, or logic paths, mistakes happen. But if the mindset shifts from forecasts as static predictions to forecasts as testable hypotheses, we harness both speed and rigor.

In this post, I explain how synthetic analysts (AI agents) work, how Boards and executives can evaluate them, how to build explainability and reproducibility into your decision systems, and why ROI on AI flows from disciplined hypothesis management, not just from better tools. I draw examples from my time at Accenture, GN ReSound, BeyondID, and logistics companies, and from my advisory work at United Way.

What Is a Synthetic Analyst?

A synthetic analyst is an AI agent trained on business rules, historical data from systems of record, and logic flows. It ingests data from finance, operations, product, customer, legal, and other functions. It runs simulations, proposes insights, flags risks and deviations, and summarizes outputs. It is not human, but it collaborates with humans to support decision making.

Traditional analysts build spreadsheets and slide decks and reconcile data monthly or quarterly. Synthetic analysts can produce forecasts hourly or in real time. They detect anomalies, highlight deviations from trend, and propose corrective action.
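To make the anomaly-detection idea concrete, here is a minimal Python sketch that flags a data point when it sits more than a chosen number of standard deviations from its trailing-window mean. The six-period window, the threshold of 3.0, the function name `flag_deviations`, and the unit-cost figures are all illustrative assumptions rather than a description of any specific production system; real deployments often use seasonal or robust methods instead of a plain z-score.

```python
from statistics import mean, stdev


def flag_deviations(series, window=6, threshold=3.0):
    """Flag points that deviate sharply from their trailing-window mean.

    Hypothetical helper: returns (index, z_score) pairs for every point whose
    z-score against the previous `window` points exceeds `threshold`.
    """
    flagged = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            continue  # flat history: z-score undefined, so skip this point
        z = (series[i] - mu) / sigma
        if abs(z) > threshold:
            flagged.append((i, round(z, 2)))
    return flagged


# Hypothetical weekly cost per unit; index 8 mimics a sudden supplier price jump.
unit_costs = [10.1, 10.0, 10.2, 9.9, 10.1, 10.0, 10.2, 10.1, 11.5, 10.2]
print(flag_deviations(unit_costs))  # only index 8 is flagged
```

Because the window trails the current point, each flag uses only data that was available at that moment, which keeps the alert reproducible after the fact.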

At GN ReSound, for example, I saw how variability in manufacturing cost per unit was affecting gross margin. Without synthetic analysts at the time, we had to rely on the monthly close and manual variance analysis. With synthetic forecasts, we could have spotted supplier cost fluctuations earlier.

At BeyondID, we have built predictive models that combine backlog data, pipeline velocity, utilization, and contract terms. Those models become hypotheses we test.

At United Way, my advisory role often involves recommending metrics. I have seen how synthetic models could forecast donation flows based on economic indicators and donor sentiment. But the real difference comes when those forecasts are treated not as commitments but as hypotheses to validate.

Why Forecasts as Hypotheses Matter

Forecasts are powerful, but they are only as good as their assumptions. When executives treat them as fixed, they invite risk: when the model’s inputs shift or unexpected events happen, the forecast fails.

In chaotic environments, small changes in initial conditions ripple through systems. A logistics disruption. A regulatory shift. A new competitor. These are sensitive dependencies. Systems theory suggests that in complex adaptive systems, you cannot predict every move, but you can build resilience by continually updating your hypotheses.

Viewing forecasts as hypotheses means you ask: What assumptions underlie this prediction? What might change? What must hold for this forecast to be valid?
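One way to operationalize those three questions is to store every forecast together with the assumptions it depends on, then test both the number and the assumptions when actuals arrive. The sketch below is a hypothetical Python structure; the class name `ForecastHypothesis`, the 5% tolerances, and the churn and price figures are assumptions made for illustration.

```python
import math
from dataclasses import dataclass, field


@dataclass
class ForecastHypothesis:
    """A forecast stated as a testable claim plus the assumptions it rests on."""
    metric: str
    predicted: float
    tolerance: float                                  # acceptable relative error, e.g. 0.05 = 5%
    assumptions: dict = field(default_factory=dict)   # assumption name -> assumed numeric value

    def test(self, actual, observed, assumption_tolerance=0.05):
        """Compare the forecast and its assumptions with what actually happened."""
        relative_error = abs(actual - self.predicted) / abs(self.predicted)
        # An assumption counts as broken if its observed value drifted beyond the tolerance.
        broken = [name for name, assumed in self.assumptions.items()
                  if name in observed
                  and not math.isclose(observed[name], assumed, rel_tol=assumption_tolerance)]
        return {
            "holds": relative_error <= self.tolerance,
            "relative_error": round(relative_error, 3),
            "broken_assumptions": broken,
        }


revenue = ForecastHypothesis(
    metric="Q3 revenue",
    predicted=1_000_000,
    tolerance=0.05,
    assumptions={"monthly_churn": 0.02, "avg_price": 120.0},
)
result = revenue.test(actual=910_000, observed={"monthly_churn": 0.035, "avg_price": 121.0})
print(result)
```

A failed test then points at a specific assumption to revisit (here, churn) rather than leaving the team with a generic miss.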

Key Components Boards Must Expect in Synthetic Analysts

When you introduce AI agents into forecasting, planning, capital allocation, or risk assessment, you must ensure certain features are built into those synthetic analysts.

- Explainability of Outputs

Every forecast or recommendation must list its input data sources, the timeframe, data confidence, the logic used, the rules applied, and its boundary conditions.

- Reproducibility and Version Control

You must be able to recreate the model state as it was when the recommendation was made. That requires versioning dataset inputs, model pipelines, and prompt or rule templates.

- Logic Transparency

What triggered a recommendation or anomaly detection? Was it a change in customer behavior? Was it a change in supplier costs? Was it a drift in usage metrics?

- Counterfactuals Considered

What did the synthetic analyst choose not to recommend and why? What alternatives did it consider?

- Human-in-the-Loop Design

Some decisions require human override. Others become final only if confidence thresholds are met. Human review remains essential in regulated domains such as finance and legal.

- Audit Trails and Overrides

Track when humans override agent outputs. Keep logs of errors, model drift, and retraining.

- Scenario Simulation and Decision Trees

Synthetic analysts should present multiple paths (base case, upside case, and downside case), each with an assigned probability.
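Several of these requirements (explainability, reproducibility, and version control) can be covered by persisting a structured record alongside every forecast. The Python sketch below is one hypothetical layout: the field names, the snapshot identifiers, and the use of a SHA-256 hash over canonical JSON as a fingerprint are assumptions for illustration, not a standard schema. The fingerprint changes whenever any input, model version, rule, or assumption changes, so two runs can be checked for identical conditions.

```python
import hashlib
import json
from datetime import datetime, timezone


def forecast_record(forecast, inputs, model_version, rules, assumptions):
    """Bundle a forecast with everything needed to explain and reproduce it."""
    payload = {
        "inputs": inputs,                # data sources, snapshot ids, confidence levels
        "model_version": model_version,  # pinned model or pipeline version
        "rules": rules,                  # rule or prompt template versions applied
        "assumptions": assumptions,      # boundary conditions the forecast depends on
    }
    # Canonical JSON (sorted keys, fixed separators) so identical conditions hash identically.
    # The fingerprint covers the conditions only, not the forecast value itself.
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    fingerprint = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return {
        **payload,
        "forecast": forecast,
        "fingerprint": fingerprint,
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


inputs = {"pipeline": {"snapshot": "2025-07-01", "confidence": "high"},
          "utilization": {"snapshot": "2025-06-30", "confidence": "medium"}}
first = forecast_record(4.2e6, inputs, "rev-model-1.3", ["close-rate-v2"], {"fx": "flat"})
rerun = forecast_record(4.2e6, inputs, "rev-model-1.3", ["close-rate-v2"], {"fx": "flat"})
retrained = forecast_record(4.3e6, inputs, "rev-model-1.4", ["close-rate-v2"], {"fx": "flat"})
print(first["fingerprint"] == rerun["fingerprint"])      # True: same conditions
print(first["fingerprint"] == retrained["fingerprint"])  # False: model version changed
```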

How to Assess ROI on AI Forecast Systems

Return on investment in AI decision systems is not just about cost savings; it is about value unlocked. Here are ways Boards and CFOs should assess ROI:

- Forecast variance reduction: How much smaller are the model’s errors compared with historical forecasts?

- Decision latency reduction: How much faster can decisions be made because a synthetic analyst surfaces issues or opportunities earlier?

- Cost of manual effort shifted: How many hours of finance, operations, legal, and HR work are either replaced or elevated from manual processing to oversight?

- Risk mitigation: How much is exposure to surprise risks or negative deviations reduced?

- Strategic optionality: How quickly can you test hypotheses and adapt when conditions change?
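The first metric can be made concrete by comparing error rates for the same periods. The sketch below uses mean absolute percentage error (MAPE) as the error measure; that choice, the function names, and the sample figures are assumptions, and teams may prefer other measures (for example, weighted MAPE or RMSE) depending on their data.

```python
def mape(actuals, forecasts):
    """Mean absolute percentage error; assumes no actual value is zero."""
    if len(actuals) != len(forecasts) or not actuals:
        raise ValueError("actuals and forecasts must be non-empty and the same length")
    return sum(abs(a - f) / abs(a) for a, f in zip(actuals, forecasts)) / len(actuals)


def error_reduction(actuals, baseline_forecasts, model_forecasts):
    """Relative reduction in MAPE of the model versus the historical baseline."""
    base = mape(actuals, baseline_forecasts)
    new = mape(actuals, model_forecasts)
    return (base - new) / base


# Hypothetical quarterly figures: legacy spreadsheet forecasts vs. model forecasts.
actuals = [100.0, 120.0, 110.0, 130.0]
legacy = [90.0, 130.0, 100.0, 145.0]
model = [95.0, 125.0, 105.0, 137.5]
print(f"Error reduction: {error_reduction(actuals, legacy, model):.0%}")
```

In this toy data the model’s misses are exactly half the legacy misses, so the reduction comes out at 50%; real comparisons should use the same periods and the same actuals for both forecasts.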

At BeyondID, our predictive models reduced forecasting error by nearly 30%. That freed up finance team hours, which we redirected to scenario planning and capital allocation.

At a logistics company in Berkeley, seasonal revenue shocks used to cause large swings in cash flow forecasts. Synthetic forecasts enabled management to test multiple scenarios in real time, adjusting assumptions such as fuel costs or demand shifts. That produced better alignment with investors and more stable cash burn.

At GN ReSound, we employed similar hypothesis testing when supplier cost inflation began to rise sharply. Forecasts that included counterfactuals helped us decide whether to hedge costs or renegotiate with suppliers early.

Systems Theory: Chaos, Order, and Insights for a Decision Mindset

Systems theory gives us tools to think about businesses as living systems. Chaos often looks random but contains underlying patterns. In business, that means data noise may contain early signals of risk or opportunity, and synthetic analysts help surface those signals.

From my work at Accenture, I learned that designing systems to capture early warnings is critical. Early on, systems have high sensitivity. Later, you build stability.

In complex environments, small perturbations can lead to significant outcomes. The logistics firm in Berkeley taught me that unexpected delivery delays from one supplier could cascade into stockouts and customer dissatisfaction. A forecasting hypothesis that included supplier disruption risk helped avert margin erosion.

Order arises when feedback loops exist: synthetic agent outputs are reviewed, overrides are logged, and the model is retrained. That is how you find order in chaos, and ROI comes when you build those loops.
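A minimal sketch of such a loop, assuming the retraining decision is driven by two signals this article already names: recent forecast error and override rate. The class name, the rolling-window size, and both thresholds are hypothetical; in practice they would be set by the finance and data teams and reviewed by the Board.

```python
from collections import deque


class FeedbackLoop:
    """Hypothetical monitor: logs each reviewed forecast and signals when
    recent error or override rates suggest the model should be retrained."""

    def __init__(self, window=20, max_error=0.10, max_override_rate=0.25):
        self.errors = deque(maxlen=window)     # rolling relative errors
        self.overrides = deque(maxlen=window)  # 1 if a human overrode the output
        self.max_error = max_error
        self.max_override_rate = max_override_rate

    def record(self, predicted, actual, overridden):
        """Log one reviewed forecast once its actual value is known."""
        self.errors.append(abs(actual - predicted) / abs(actual))
        self.overrides.append(1 if overridden else 0)

    def needs_retraining(self):
        """True when recent average error or override rate breaches its threshold."""
        if not self.errors:
            return False
        mean_error = sum(self.errors) / len(self.errors)
        override_rate = sum(self.overrides) / len(self.overrides)
        return mean_error > self.max_error or override_rate > self.max_override_rate


loop = FeedbackLoop(window=4)
for _ in range(4):
    loop.record(predicted=100, actual=101, overridden=False)
print(loop.needs_retraining())  # False: small errors, no overrides
loop.record(predicted=100, actual=130, overridden=True)
loop.record(predicted=100, actual=135, overridden=True)
print(loop.needs_retraining())  # True: errors and overrides both rose
```

The bounded deques keep the signal focused on recent behavior, so an old run of good forecasts cannot mask current drift.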

Real-World Example: BeyondID Forecasting System

At BeyondID, we built a forecasting engine woven into pipeline data, backlog, contract terms, and utilization metrics. We treated every forecast as a hypothesis. We ran scenario branches: a base case if the pipeline holds steady, a downside case if cancellations increase, and an upside case if utilization improves.
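Branches like these can be summarized as a probability-weighted expected value alongside the full range, so the Board sees both the central estimate and the spread. The Python sketch below assumes each branch carries a probability and a forecast value; the function name, the probabilities, and the revenue figures are hypothetical and are not BeyondID’s numbers.

```python
def scenario_summary(scenarios):
    """scenarios maps name -> (probability, forecast value); probabilities must sum to 1."""
    total_p = sum(p for p, _ in scenarios.values())
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError(f"scenario probabilities sum to {total_p}, expected 1.0")
    expected = sum(p * v for p, v in scenarios.values())
    values = [v for _, v in scenarios.values()]
    return {"expected": expected, "range": (min(values), max(values))}


# Hypothetical quarterly revenue branches (in $ millions) and their probabilities.
branches = {
    "base: pipeline holds steady": (0.60, 12.0),
    "downside: cancellations increase": (0.25, 10.5),
    "upside: utilization improves": (0.15, 13.2),
}
print(scenario_summary(branches))
```

Rejecting probabilities that do not sum to one is a cheap guard against a scenario being silently dropped or double-counted.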

We built explainability layers: for each forecast, we stored which inputs changed what assumptions. We logged overrides. Analysts became reviewers. Controllers became curators of trust.
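Logging overrides is most useful when the log can be summarized. The sketch below is a hypothetical Python structure for recording each agent recommendation next to the value a reviewer finally approved, then reporting the override rate and the most common reasons; the class and field names and the sample entries are illustrative assumptions.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Recommendation:
    rec_id: str
    agent_value: float        # what the synthetic analyst proposed
    final_value: float        # what the human reviewer approved
    override_reason: str = ""

    @property
    def overridden(self) -> bool:
        return self.final_value != self.agent_value


def override_report(log):
    """Return the override rate and the override reasons ranked by frequency."""
    overridden = [r for r in log if r.overridden]
    rate = len(overridden) / len(log) if log else 0.0
    reasons = Counter(r.override_reason or "unspecified" for r in overridden)
    return rate, reasons.most_common()


log = [
    Recommendation("fc-101", 100.0, 100.0),
    Recommendation("fc-102", 100.0, 90.0, "known deal slippage"),
    Recommendation("fc-103", 200.0, 180.0, "known deal slippage"),
    Recommendation("fc-104", 50.0, 55.0, "pricing change"),
]
rate, reasons = override_report(log)
print(f"override rate {rate:.0%}; top reasons {reasons}")
```

A recurring reason such as deal slippage is itself a hypothesis about what the model is missing, and a candidate input for the next retraining.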

Result: forecasting variance dropped by about thirty percent, decision latency (the time to decide on a course correction) decreased by two weeks, and finance staff time saved increased by fifteen percent. Board feedback improved. Investors felt more confident. We reinvested the savings in product development rather than treating them purely as cost cuts.

Example: Berkeley Logistics Company

I worked with a logistics company in Berkeley that generates about forty million in revenue and handles warehousing, last-mile delivery, and freight. Having been CFO at Lifestyle Solutions, I could readily port that experience to this company, which provides logistics to the food industry. Seasonality and fuel cost swings create chaotic cost environments.

We introduced synthetic analysts to forecast fuel cost trends, delivery delays, and customer demand patterns. The model included counterfactuals. We asked: What if fuel costs spike? What if demand drops by ten percent? What if supplier delays grow?
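These what-if questions translate naturally into a small counterfactual harness: keep one baseline set of drivers, override one driver per scenario, and compare the resulting margin. The Python sketch below uses a deliberately simple margin model; every driver value, the 25% fuel spike, the tripling of delay days, and the per-day delay cost are hypothetical assumptions, not the Berkeley company’s data.

```python
def monthly_margin(demand_units, price, fuel_cost, fixed_cost, delay_days, cost_per_delay_day):
    """Deliberately simple margin model: revenue minus fuel, fixed, and delay costs."""
    revenue = demand_units * price
    costs = fuel_cost + fixed_cost + delay_days * cost_per_delay_day
    return revenue - costs


def run_counterfactuals(base, counterfactuals):
    """Recompute margin with one or more drivers overridden per scenario."""
    results = {"baseline": monthly_margin(**base)}
    for name, overrides in counterfactuals.items():
        results[name] = monthly_margin(**{**base, **overrides})
    return results


base = dict(demand_units=10_000, price=30.0, fuel_cost=60_000.0,
            fixed_cost=180_000.0, delay_days=2, cost_per_delay_day=5_000.0)
counterfactuals = {
    "fuel costs spike 25%": {"fuel_cost": base["fuel_cost"] * 1.25},
    "demand drops 10%": {"demand_units": base["demand_units"] * 0.9},
    "supplier delays triple": {"delay_days": base["delay_days"] * 3},
}
for name, margin in run_counterfactuals(base, counterfactuals).items():
    print(f"{name}: {margin:,.0f}")
```

Keeping the baseline immutable and expressing each scenario as a small set of overrides makes every counterfactual easy to audit and rerun.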

We built audit trails and version control, kept a human in the loop during the financial close, and flagged overrides. Predictions were compared with actuals four times per month, not just quarterly.

Outcomes: less inventory overstock and under-utilization in warehouses, smoother cash flow, fewer surprises on margin. ROI came from avoided waste and improved margin stability.

Example: United Way Advisory Forecasting

In my advisory role at United Way, I reviewed forecasting of donation flows and program spend. Traditional models assumed linear growth and fixed donor retention. But economic factors and donor sentiment vary.

We built synthetic agent models that considered counterfactuals: what if donations drop by 15 percent, what if a new campaign boosts retention by 10 percent, and what if program costs experience 5 percent inflation. Inputs included macroeconomic indicators and social media sentiment data.
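The arithmetic behind these counterfactuals is simple enough to sketch. The Python example below assumes a baseline of annual donations and program spend, treats the retention lift as a 10% increase in giving from the share of donations that comes from retained donors, and applies each change separately; all figures and that interpretation of retention are hypothetical assumptions, not United Way data.

```python
def funding_gap(donations, program_costs):
    """Positive = surplus, negative = shortfall."""
    return donations - program_costs


base_donations = 2_000_000.0  # hypothetical annual donations
retained_share = 0.60         # hypothetical share of donations from retained donors
base_costs = 1_900_000.0      # hypothetical annual program spend

scenarios = {
    "baseline": (base_donations, base_costs),
    "donations drop 15%": (base_donations * 0.85, base_costs),
    # Retention lift assumed to raise giving from retained donors by 10%.
    "campaign lifts retention 10%": (base_donations * (1 + retained_share * 0.10), base_costs),
    "program costs inflate 5%": (base_donations, base_costs * 1.05),
}
gaps = {name: funding_gap(d, c) for name, (d, c) in scenarios.items()}
for name, gap in gaps.items():
    print(f"{name}: {gap:+,.0f}")
```

Laid side by side, the scenarios show which assumption the budget is most sensitive to, which is where monitoring effort should go.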

We insisted on explainability: every recommendation showed which assumptions, data sources, and logic were used. When predictions failed, we reviewed which assumption or data input had drifted. That process helped United Way improve its donor engagement strategy and budget more resiliently.

Governance and Board Oversight

Boards now must adjust their oversight mindset. It is no longer enough to review revenue growth, margin, and customer metrics. AI decision systems require governance around logic, risk, data quality, and bias.

Boards should ask:

- Which synthetic analysts are active in our decision processes?

- What explainability framework do we have for agent output logic, assumptions, and data source confidence levels?

- How often are forecasts recalibrated when outcomes diverge materially?

- What is our override rate, and what patterns emerge from overrides?

- Are audit trails maintained with version control, traceability, and reproducibility?

At Accenture, I observed that clients with strong audit practices performed more effectively under stress. At GN ReSound, I led internal control development, and on projects we designed for risk from the start. Even in nonprofit work at United Way, oversight and transparency built trust among stakeholders.

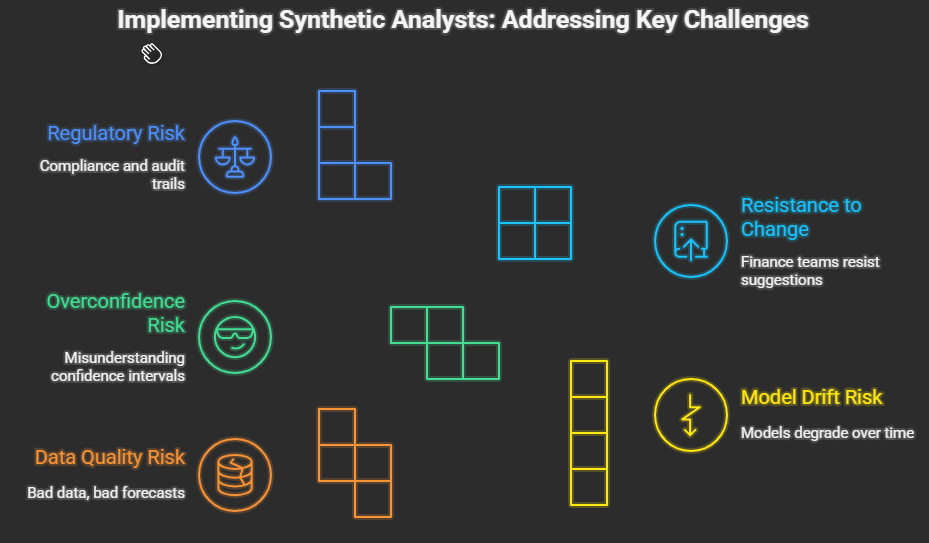

Challenges and How to Address Them

Implementing synthetic analysts and treating forecasts as testable hypotheses is not free of challenges.

- Data Quality Risk: Bad data produces bad forecasts. You must ensure data pipelines, data governance, and cleaning are strong.

- Model Drift Risk: Over time, models may degrade as inputs change. Automatic retraining and monitoring of override rates are needed.

- Overconfidence Risk: Boards must understand confidence intervals, not just point estimates.

- Resistance to Change: Finance teams used to manual spreadsheets may resist agent suggestions. You need change management.

- Regulatory or Compliance Risk: Especially in finance, legal, and healthcare, you must ensure audit trails, explainability, and legal compliance.
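For the overconfidence risk in particular, one simple way to move a Board from point estimates to ranges is to wrap each forecast in an interval built from the model’s own historical errors. The sketch below uses Python’s `statistics.quantiles` to take empirical percentiles of past relative errors; the 80% coverage, the function name, and the sample history are assumptions, and this empirical approach is only one of several ways to build prediction intervals.

```python
from statistics import quantiles


def empirical_interval(point_forecast, past_actuals, past_forecasts, coverage=0.80):
    """Wrap a point forecast in an interval derived from historical relative errors.

    Needs at least two past observations; `coverage` should leave a whole-percent tail.
    """
    rel_errors = [(a - f) / f for a, f in zip(past_actuals, past_forecasts)]
    cuts = quantiles(rel_errors, n=100, method="inclusive")  # 99 percentile cut points
    tail = round((1 - coverage) / 2 * 100)                   # e.g. 10 for 80% coverage
    low_q = cuts[tail - 1]          # e.g. 10th percentile of relative error
    high_q = cuts[100 - tail - 1]   # e.g. 90th percentile of relative error
    return point_forecast * (1 + low_q), point_forecast * (1 + high_q)


# Hypothetical history: seven past forecasts of 100 and what actually happened.
past_actuals = [105, 95, 110, 90, 100, 102, 98]
past_forecasts = [100] * 7
low, high = empirical_interval(200, past_actuals, past_forecasts)
print(f"Point forecast 200; 80% range {low:.0f} to {high:.0f}")
```

With only a handful of past errors the interval is itself uncertain, so the history window is worth reporting alongside the range.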

Addressing them requires investing in governance, human-in-the-loop design, transparency, versioning, oversight, and culture change.

Mindset Shift: From Certainty to Continuous Learning

When I worked at GN ReSound, we saw that forecasting accuracy improved when we embraced error as feedback. When United Way adjusted expectations by testing hypotheses rather than treating the forecast as a promise, more donors became engaged. At BeyondID, we built systems to learn from every forecasting error.

Systems theory suggests that systems that adapt perform better over time. Chaos theory suggests that minor deviations are opportunities to learn. Synthetic analysts, when designed well, give you those deviations early.

Metrics Boards and Execs Should Track

To manage ROI and trust in synthetic forecasting systems, track:

- Forecast error or variance ratio compared to the historical baseline

- Decision latency: how fast you act when alerts or anomalies appear

- Override rate and patterns in overrides

- Model retraining frequency and decay metrics

- Input data freshness and data confidence measures

- Counterfactual scenario usage: how often upside and downside cases are considered

- Financial outcome divergence: how actuals compare against forecast hypotheses
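Two of these metrics, input data freshness and counterfactual scenario usage, are easy to overlook because they are not financial numbers. The Python sketch below shows one hypothetical way to compute them; the source names, the per-source maximum ages, the one-day default, and the shape of the decision records are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def stale_sources(last_refreshed, max_age, now=None, default_max_age=timedelta(days=1)):
    """Names of input sources whose last refresh is older than allowed."""
    now = now or datetime.now(timezone.utc)
    return sorted(name for name, refreshed_at in last_refreshed.items()
                  if now - refreshed_at > max_age.get(name, default_max_age))


def counterfactual_usage(decisions):
    """Share of decisions whose review looked at any scenario besides the base case."""
    if not decisions:
        return 0.0
    used = sum(1 for d in decisions if any(s != "base" for s in d["scenarios_reviewed"]))
    return used / len(decisions)


now = datetime(2025, 7, 12, 9, 0, tzinfo=timezone.utc)
last_refreshed = {
    "crm_pipeline": now - timedelta(hours=2),
    "erp_ledger": now - timedelta(days=3),
    "fuel_index": now - timedelta(minutes=10),
}
max_age = {"fuel_index": timedelta(hours=1)}
decisions = [
    {"scenarios_reviewed": ["base"]},
    {"scenarios_reviewed": ["base", "downside"]},
]
print(stale_sources(last_refreshed, max_age, now=now))  # ['erp_ledger']
print(counterfactual_usage(decisions))                  # 0.5
```

Passing `now` explicitly keeps the freshness check reproducible when a past dashboard has to be recreated.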

These metrics are not vanity metrics. They are signals of system health and value creation.

Conclusion: Responsible Synthetic Intelligence as Strategic Asset

In a world shaped by rapid change, complexity, and uncertainty, synthetic analysts represent a new class of tools for finance, operations, and leadership. When forecasts are treated as hypotheses, and when explainability, reproducibility, human oversight, and governance are baked in, ROI on AI forecasts becomes real.

In my experience at Accenture, GN ReSound, BeyondID, and the Berkeley logistics company, and in my advisory work at United Way, I have seen what works. Speed without clarity fails. Accuracy without learning decays. Intelligence without governance is brittle.

The next generation of companies will not be those with the most data or the fastest models. They will be those with the clearest decision logic, the strongest hypothesis pipelines, the best audit trails, and the culture to treat forecasts not as truth but as experiments.

Boards must evolve. Leaders must embrace synthetic analysts and build systems of hypothesis testing. If you do, you will find order in chaos, ROI will become visible, and intelligence will become a core part of value creation.