Introduction

AI Governance: Transforming Capital Allocation Strategies

By Hindol Datta / July 11, 2025

Discusses how AI reshapes the capital budgeting process, particularly in SG&A, R&D, and Customer Experience.

From Curiosity to Fiduciary Duty

Artificial Intelligence is no longer an R&D topic or a back-office efficiency play. It sits squarely within the realms of enterprise risk, strategic advantage, and regulatory exposure. For boards of Series A through Series D companies, the question is no longer whether to engage, but how to govern effectively. AI is not simply a tool; it is a decision system. And like any system that influences financial outcomes, customer trust, and legal exposure, it demands structured oversight through AI governance, supported by the right mix of generative AI consultants, AI technology services, and experienced AI consulting to align innovation with accountability.

Boards must now treat AI with the same discipline they apply to capital allocation, M&A diligence, and cybersecurity. This is not a technical responsibility. It is a governance imperative.

Why Boards Can No Longer Stay Silent on AI

The emergence of intelligent agents that perform financial forecasting, customer interaction, legal document review, and risk scoring creates a new kind of operational leverage but also introduces a new layer of systemic risk. AI models are dynamic. They are probabilistic. They learn and adapt. They may hallucinate. They may encode bias. And unlike human operators, they do not always explain their reasoning.

In one company I advised, an AI-driven pricing assistant proposed a multi-tiered pricing change that, while mathematically sound, introduced legal risk due to regional price discrimination laws. No one had considered vetting the model through a legal lens. The output was live before risk was even considered.

The lesson was clear: AI can act faster than governance, unless governance is actively embedded.

Five Areas Boards Must Now Monitor Closely

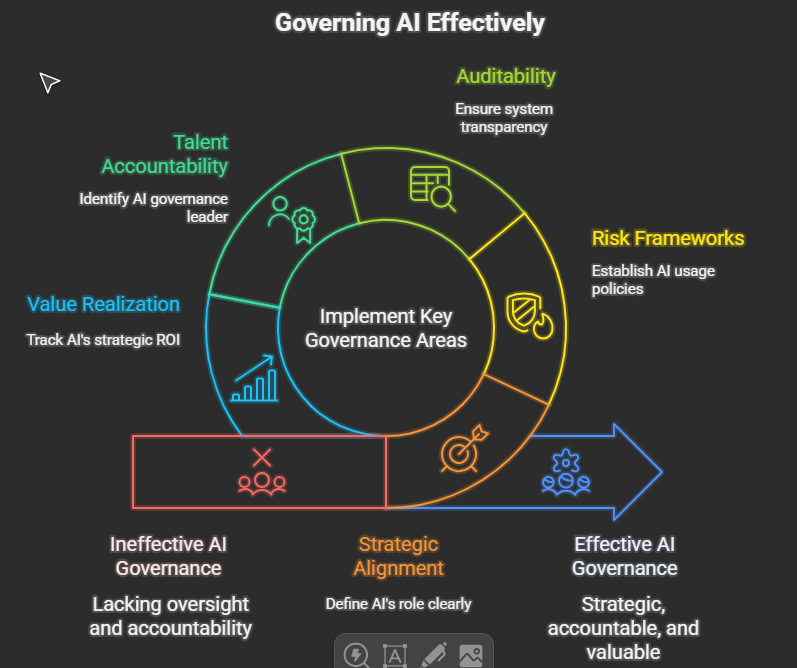

To govern AI effectively, boards must anchor their oversight in five key areas: strategy, risk, auditability, talent, and value realization. Each deserves explicit attention, recurring visibility, and structured inquiry.

The visual above summarizes these five areas. Details on each follow:

1. Strategic Alignment: Is AI Central or Peripheral?

Boards must ask: What role does AI play in the company’s core value proposition? Is it foundational to the product, or merely an efficiency layer? If it is central, governance must go deeper. If it is peripheral, boards must ensure it doesn’t introduce disproportionate risk.

For example, a GenAI engine powering a legal search platform carries very different exposure than an AI-enabled expense categorization tool. One may affect contract interpretation. The other optimizes T&E coding. Both use AI. But only one touches critical judgment.

Boards should request an AI Materiality Matrix: a map that shows where AI touches customers, decisions, revenue, and risk. That map should evolve as the product evolves.
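One way to picture such a matrix is as a small table of AI systems scored against the four touchpoints named above. The sketch below is illustrative only: the 0-3 exposure scale, the "highest exposure wins" scoring rule, and the example systems and scores are all assumptions, not a standard.

```python
from dataclasses import dataclass

# The four touchpoints named in the text; the scale below is an assumption.
DIMENSIONS = ("customers", "decisions", "revenue", "risk")


@dataclass
class AISystem:
    name: str
    # Exposure per dimension on an assumed 0-3 scale (0 = none, 3 = critical).
    exposure: dict[str, int]

    def materiality(self) -> int:
        # Assumed scoring rule: the highest single exposure drives materiality,
        # so one critical touchpoint is not averaged away by low scores elsewhere.
        return max(self.exposure.get(d, 0) for d in DIMENSIONS)


def materiality_matrix(systems: list[AISystem]) -> list[tuple[str, list[int], int]]:
    """Return one row per system: name, exposure per dimension, overall score.

    Rows are sorted so the most material systems appear first.
    """
    rows = [
        (s.name, [s.exposure.get(d, 0) for d in DIMENSIONS], s.materiality())
        for s in systems
    ]
    return sorted(rows, key=lambda row: row[2], reverse=True)


# Hypothetical systems echoing the two examples in this section.
systems = [
    AISystem("expense categorization", {"decisions": 1, "risk": 1}),
    AISystem("legal search engine", {"customers": 3, "decisions": 3, "revenue": 2, "risk": 3}),
]
matrix = materiality_matrix(systems)
for name, scores, overall in matrix:
    print(f"{name:<24} {scores} overall={overall}")
```

Taking the maximum rather than an average is a deliberate choice in this sketch: a system that touches critical judgment in one place should surface at the top of the board's list even if it is harmless everywhere else.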

2. Risk and Policy Frameworks: Is There a Playbook for AI Use?

Too many companies deploy AI without an explicit policy. Boards must demand one. This policy should cover: model selection criteria, acceptable use, prohibited use, bias mitigation strategies, privacy protections, third-party dependency risks, and fallback protocols.

For instance, if GenAI powers a customer service agent, what happens when the model misclassifies a complaint? Who owns the correction? Is it auditable? Is it remediable?

Boards should also ask if the company has mapped its AI exposure to regulatory regimes: GDPR, CCPA, HIPAA, and emerging global AI laws like the EU AI Act. Compliance may not be required yet—but readiness signals maturity.

3. Auditability and Explainability: Can the System Be Trusted?

Any AI system that affects customers, employees, or financial outcomes must be auditable. That means the company must maintain model logs, decision traces, override capabilities, and a method to explain why a decision was made.
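As a rough illustration of what a decision trace with an override might look like in practice, here is a minimal sketch. The field names, the `DecisionTrace` class, and the example values are hypothetical; a real system would add access controls, tamper-evident storage, and retention rules.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionTrace:
    model_id: str          # hypothetical identifier, e.g. model name plus version
    inputs: dict           # the features or prompt the model saw
    output: str            # what the model decided or recommended
    explanation: str       # human-readable rationale or top factors
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    overridden_by: Optional[str] = None   # reviewer who overrode, if any
    final_output: Optional[str] = None    # decision after the override

    def override(self, reviewer: str, new_output: str) -> None:
        # Keep the original output; record the human decision alongside it.
        self.overridden_by = reviewer
        self.final_output = new_output

    def to_log_line(self) -> str:
        # One JSON object per line is easy to append and to replay later.
        return json.dumps(asdict(self), sort_keys=True)


# Hypothetical example: a pricing recommendation held back by legal review.
trace = DecisionTrace(
    model_id="pricing-assistant-v2",
    inputs={"region": "EU", "tier": "pro"},
    output="raise price 8%",
    explanation="demand elasticity estimate below threshold",
)
trace.override("legal-review", "hold price pending review")
line = trace.to_log_line()
print(line)
```

The point of the design is that an override never erases what the model originally proposed: both the model's output and the human's final decision remain on record, which is what makes the result reproducible under scrutiny.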

In one AdTech company, we discovered that a GenAI recommendation engine was optimizing for click-throughs at the expense of user experience. No one knew how the model had made that tradeoff. There was no audit trail. We had to retrain the system and rebuild trust.

Boards must ask: What mechanisms exist for AI explainability? Can humans override AI decisions? Can the company reproduce results under scrutiny?

In regulated industries, this becomes not just good governance but survival.

4. Talent and Accountability: Who Owns AI in the Org?

AI systems need stewardship. Boards must ensure there is a clearly identified AI governance leader, ideally reporting to the CEO, CFO, or Chief Risk Officer, who owns oversight of AI projects.

In smaller firms, this may be a cross-functional AI committee that includes finance, legal, engineering, and product. The board must ensure that someone is accountable not just for deploying AI, but for monitoring its behavior, documenting its evolution, and correcting its errors.

The question to ask is: who is on point if the model goes wrong?

Without that clarity, AI risk becomes an orphaned liability.

5. Value Realization: Is AI Delivering Strategic or Financial ROI?

Boards must differentiate between AI as novelty and AI as leverage. Ask not just what is being automated, but what is being improved. Are decisions faster? Is forecast accuracy better? Is risk reduced? Is margin enhanced?

An example I have cited in another article bears repeating here. At one nonprofit I assisted, an AI system was implemented to enhance grant application triage. It succeeded in cutting review time by 40 percent. But we failed to measure the quality of outcomes. Grant rejection accuracy dropped. False positives increased. The system was optimized for speed, not fairness. We had to roll back.

Boards should expect AI ROI to be tracked with rigor: quantified impact, timelines, and cost-benefit ratios. Vanity metrics will not suffice. Strategic alignment and clear KPIs must drive AI investments.

AI-Specific Questions Boards Should Be Asking Now

- What AI models are currently deployed, and where do they impact customers or financial outcomes?

- What unique data are we using to train these models, and how is that data protected?

- How do we handle AI errors—both technically and reputationally?

- What oversight mechanisms exist to govern AI updates, model drift, and re-training?

- What happens if the vendor hosting our AI goes offline or changes terms?

- Are we prepared to explain and defend AI-driven decisions in a legal or regulatory context?

- Do we have a clear, evolving strategy for integrating AI into our product roadmap and internal operations?

Embedding AI Governance into the Board Agenda

Just as cybersecurity now appears as a recurring board topic, so too must AI governance. Every audit committee, every risk committee, and every technology subcommittee must start including AI oversight in their charters.

This does not mean every board member must become a machine learning expert. But they must become literate in how AI systems work, what failure modes exist, and how trust is preserved.

A quarterly AI Risk Dashboard is a good place to start—summarizing model usage, error rates, override incidents, regulatory alerts, and investment ROI. Paired with scenario walkthroughs and tabletop exercises, this becomes not just oversight—but preparedness.
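To make the dashboard idea concrete, the sketch below aggregates three of the metrics mentioned above (model usage, error rates, and override incidents) from a list of decision events. The event format and the sample data are invented for illustration; regulatory alerts and investment ROI are left out because they would come from other sources.

```python
from collections import defaultdict

# Hypothetical event records; in practice these would come from model logs.
events = [
    {"model": "pricing-assistant", "error": False, "overridden": True},
    {"model": "pricing-assistant", "error": True, "overridden": True},
    {"model": "support-agent", "error": False, "overridden": False},
    {"model": "support-agent", "error": False, "overridden": False},
]


def quarterly_dashboard(events):
    """Aggregate per-model decision count, error rate, and override count."""
    totals = defaultdict(lambda: {"decisions": 0, "errors": 0, "overrides": 0})
    for e in events:
        row = totals[e["model"]]
        row["decisions"] += 1
        row["errors"] += int(e["error"])
        row["overrides"] += int(e["overridden"])
    # Add the error rate as a derived column for each model.
    return {
        model: {**row, "error_rate": row["errors"] / row["decisions"]}
        for model, row in totals.items()
    }


dashboard = quarterly_dashboard(events)
for model, row in dashboard.items():
    print(model, row)
```

Even a summary this simple gives a board something to question: a model with a high override count relative to its decisions is one whose output humans do not yet trust.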

Why This Matters Now—Not Later

The AI wave will not wait for slow governance. Regulations are coming. Customers are watching. Investors are asking. Talent is choosing where to work based on ethics and transparency. Boards that fail to engage will find themselves reactive at best, irrelevant at worst.

AI changes how decisions are made, who makes them, and how they scale. That is governance territory. It cannot be delegated away. It must be embraced as a core board responsibility.

A New Era of Board Stewardship

The best boards I work with now treat AI governance as a competitive advantage. They ask sharp questions, demand evidence, and support leadership with clarity. They understand that governing AI is not about controlling algorithms. It is about stewarding judgment in a world where intelligence scales beyond human bandwidth.

AI is not just a risk. It is an amplifier. With thoughtful oversight, it amplifies value, resilience, and strategy. Without it, it amplifies blind spots.

Boards must choose. The future of governance is not just fiduciary. It is algorithmic. And it is here.

Hindol Datta, CPA, CMA, CIA, brings 25+ years of progressive financial leadership across cybersecurity, SaaS, digital marketing, and manufacturing. Currently VP of Finance at BeyondID, he holds advanced certifications in accounting, data analytics (Georgia Tech), and operations management, with experience implementing revenue operations across global teams and managing over $150M in M&A transactions.